The cochlea: operating principle

From physics and acoustics, we know that sound is the propagation of an oscillation through an elastic medium such as air, water, or even metal.

Anyone who works with electronics knows that a suitable transducer, a microphone, can convert this oscillation into an analogous electrical oscillation. Anyone who works with computers knows that this electrical signal can be converted into a numeric value, and that a succession of many such values over time represents the variation of the original signal. The process can also be run in reverse, ending at the oscillating membrane of another transducer, the loudspeaker, which reproduces the original sound.

This is the principle behind all the digital audio devices we use today, from CDs to MP3 players.
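The digitization chain described above can be sketched in a few lines of Python. This is only an illustration: the function names, the 440 Hz test tone, and the 16-bit depth are assumptions chosen for the example, and a sine function stands in for the microphone signal.

```python
import math

def sample_and_quantize(freq_hz, duration_s, sample_rate_hz=44100, bits=16):
    """Sample a unit-amplitude sine wave and round each sample to a signed integer."""
    max_level = 2 ** (bits - 1) - 1                 # largest positive code, 32767 for 16 bits
    n_samples = int(round(duration_s * sample_rate_hz))
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz                      # time of the n-th sample, in seconds
        pressure = math.sin(2 * math.pi * freq_hz * t)   # stand-in for the analog signal
        samples.append(round(pressure * max_level))      # the numeric value that gets stored
    return samples

def to_analog(samples, bits=16):
    """Reverse step: map the stored integers back to the range [-1, 1]."""
    max_level = 2 ** (bits - 1) - 1
    return [s / max_level for s in samples]

codes = sample_and_quantize(440.0, 0.001)           # 1 ms of a 440 Hz tone
restored = to_analog(codes)
print(len(codes), codes[:5])
```

The values in `restored` differ from the original sine only by the rounding error of the quantization step, which is what lets the loudspeaker reproduce the starting sound.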

Given how common and widespread such devices are, we might think that the cochlea contains nothing more than an organic microphone, one that modulates a nerve signal in proportion to the oscillation transmitted by the ossicles. And since MP3 players discretize the signal (that is, they assign a precise numerical value to the signal intensity at a given instant), you might think that the hair cells simply emit discrete discharges in proportion to the intensity of the vibration to which they are subjected.

This is only partly true. The reality is a bit more complicated. Let’s see why.

By convention, we assume that the spectrum audible to humans extends from 20 Hz to 20,000 Hz. Honestly, I doubt that most people can really hear anything above 16 kHz, and even that is optimistic.

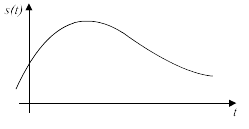

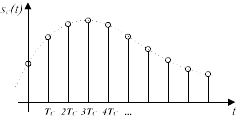

The Nyquist-Shannon sampling theorem tells us that, in order to reconstruct a wave after it has been sampled, the sampling frequency must be at least twice the maximum frequency you want to reproduce. This is why audio CDs are sampled at 44.1 kHz: to be able to play frequencies up to 20 kHz. In other words, for every second of music stored on an electronic medium, 44,100 numeric values are written, and the time interval between one value and the next is about 23 microseconds. The images above show the transition from an analog signal (top), represented by a continuous curve such as the variation of air pressure on the eardrum over time, to the same signal after sampling (bottom). Instead of a continuous curve we have a set of values, equally spaced in time, which in this example represent the pressure at regular intervals.
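The arithmetic behind these figures, and what goes wrong when the rule is violated, can be checked with a short sketch. The 30 kHz test tone and the helper name `sampled_sine` are assumptions made only for this illustration.

```python
import math

SAMPLE_RATE_HZ = 44100

sample_interval_s = 1 / SAMPLE_RATE_HZ     # time between two consecutive samples
nyquist_hz = SAMPLE_RATE_HZ / 2            # highest frequency the samples can represent

def sampled_sine(freq_hz, n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Values of a unit sine wave taken at the sampling instants."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]

# A 30 kHz tone lies above the Nyquist limit. Its samples are indistinguishable
# (apart from a sign flip) from those of a 14.1 kHz tone: this is aliasing.
too_high = sampled_sine(30000, 50)
alias = sampled_sine(SAMPLE_RATE_HZ - 30000, 50)

print(f"interval: {sample_interval_s * 1e6:.1f} microseconds")
print(f"Nyquist limit: {nyquist_hz:.0f} Hz")
print(max(abs(a + b) for a, b in zip(too_high, alias)))   # practically zero
```

Once sampled, the two tones can no longer be told apart, which is why the sampling frequency has to be chosen with the highest frequency of interest in mind.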

Since our nervous system is made up of neurons, which operate in discrete pulses, our cochlear transducer does in fact have to sample the signal. In doing so, however, it runs into the physiological limit of the neurons themselves, which can generate pulses no closer together than a few tenths of a millisecond.
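A back-of-the-envelope calculation shows how far this limit falls short. The value of 0.3 ms used below is an assumption picked from the "few tenths of a millisecond" range purely for illustration, and the function names are hypothetical.

```python
def max_firing_rate_hz(min_interval_s):
    """Highest pulse rate a neuron can sustain given its minimum interval between pulses."""
    return 1.0 / min_interval_s

def required_sample_rate_hz(max_freq_hz):
    """Minimum sampling rate for a given maximum frequency (sampling theorem)."""
    return 2.0 * max_freq_hz

ASSUMED_MIN_INTERVAL_S = 0.3e-3    # assumed: "a few tenths of a millisecond"

neuron_rate = max_firing_rate_hz(ASSUMED_MIN_INTERVAL_S)
needed_rate = required_sample_rate_hz(20000)      # to cover the full audible range
shortfall = needed_rate / neuron_rate

print(f"one neuron: about {neuron_rate:.0f} pulses per second")
print(f"needed for 20 kHz: {needed_rate:.0f} samples per second")
print(f"a single neuron is roughly {shortfall:.0f} times too slow")
```

Under this assumption a single neuron tops out at a few thousand pulses per second, an order of magnitude below what sampling the full audible band would demand.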

This places a severe limit on the maximum frequency that can be encoded this way. In practice, the auditory system combines two mechanisms: tone coding through the tonotopic arrangement along the basilar membrane, which we discuss in the next section, and a phase-locking system.

Moreover, even if the inner ear really could sample the signal at that rate, the resulting stream of information would require extremely onerous processing once it reached the brain. Since we can hardly imagine the signal being converted back into an analog one, the brain would have to perform discrete Fourier transforms to filter the signal and extract information from it, processing tens of thousands of values per second.
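To give an idea of the work involved, here is a minimal sketch of a discrete Fourier transform written directly from its definition. The sample rate, block length, and test tone are assumptions chosen so that the tone falls exactly on one frequency bin.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive DFT: magnitude of each frequency bin from 0 up to the Nyquist bin."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):                    # one pass over the data per bin
        acc = 0j
        for i, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * i / n)
        mags.append(abs(acc) / n)
    return mags

SAMPLE_RATE_HZ = 8000      # assumed for the example
N = 200                    # block length, so each bin is 8000 / 200 = 40 Hz wide

signal = [math.sin(2 * math.pi * 440 * i / SAMPLE_RATE_HZ) for i in range(N)]
mags = dft_magnitudes(signal)

peak_bin = max(range(len(mags)), key=mags.__getitem__)
peak_hz = peak_bin * SAMPLE_RATE_HZ / N
print(peak_hz)                                     # frequency of the strongest bin
print(N * (N // 2 + 1), "complex multiplications for", N, "samples")
```

Even for this short block the naive approach needs tens of thousands of multiplications, and the cost grows with the square of the block length. Fast Fourier transform algorithms reduce that cost considerably, but the point stands: this is a lot of arithmetic to do continuously and in real time.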

With the hardware available, nature could not have built a real-time system that works the way today's electronic devices do.

So nature followed a different path: she created a mechanical spectrum analyzer, or an electromechanical one if you prefer.

The cochlea does not work like a microphone, which detects the sound intensity moment by moment and associates some kind of nervous stimulation with it. It is instead a system that associates specific nervous stimuli with each frequency present in the perceived sound!

For example, at a given moment while we are listening to a double bass, some hair cells dedicated to low frequencies will send a strong impulse to the brain, while the cells dedicated to high frequencies, excited by the sound of the bow's horsehair rubbing across the strings, will send much weaker impulses.
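The idea of one channel per frequency can be imitated with a toy bank of detectors, each tuned to a single frequency. This is only an analogy, not a model of the cochlea: the signal (a strong 50 Hz tone plus a faint 4 kHz component standing in for the bow noise), the four channel frequencies, and the function name are all assumptions made for the example.

```python
import math

def channel_response(samples, sample_rate_hz, freq_hz):
    """Amplitude of the component at freq_hz, found by correlating with a sine and a cosine."""
    n = len(samples)
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / sample_rate_hz)
             for i, x in enumerate(samples))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
             for i, x in enumerate(samples))
    return 2 * math.hypot(re, im) / n

SAMPLE_RATE_HZ = 16000
N = 1600                                  # 0.1 s of signal

# Toy "double bass": strong low note plus a weak high-frequency component.
signal = [1.0 * math.sin(2 * math.pi * 50 * i / SAMPLE_RATE_HZ)
          + 0.05 * math.sin(2 * math.pi * 4000 * i / SAMPLE_RATE_HZ)
          for i in range(N)]

channels_hz = [50, 200, 1000, 4000]       # hypothetical "hair cell" tunings
responses = {f: channel_response(signal, SAMPLE_RATE_HZ, f) for f in channels_hz}

for f, r in responses.items():
    print(f"{f:>5} Hz channel: {r:.3f}")
```

The low-frequency channel responds strongly, the high-frequency channel responds weakly, and the channels in between stay silent, which is the pattern described for the double bass.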

That is the operating principle of our biological spectrum analyzer. In the next section we will see how nature achieved this.

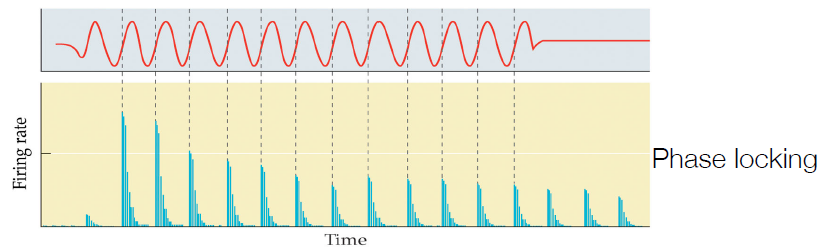

The auditory system uses a second mechanism to encode the various frequencies, in addition to the tonotopic coding of the cochlea.

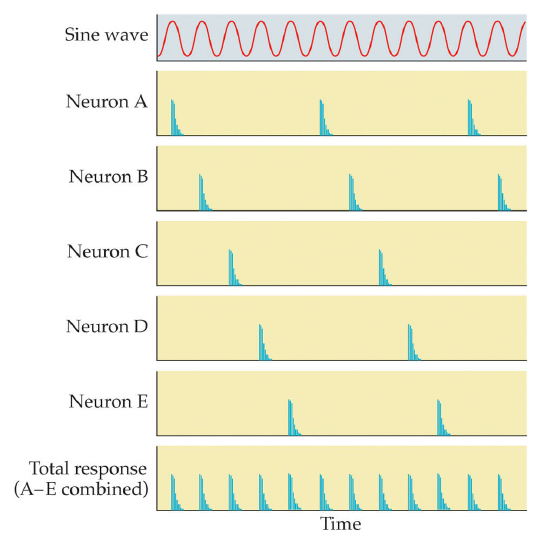

Up to frequencies on the order of 3 kHz, the cochlea can directly use a phase-locking system, in which a single neuron fires at a specific point of the sound wave at a given frequency. The firing patterns therefore form a temporal code.

For higher frequencies, up to 5 kHz, the volley principle has been proposed, in which several neurons must cooperate to reconstruct the pattern.

Above 5 kHz, the firing rate of neurons is in any case too slow, so for the highest-pitched sounds the brain must rely solely on information about which region of the membrane is being excited.
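Phase locking and the volley principle can be illustrated with a deliberately simplified toy model. It assumes an idealized neuron that always fires at the same point of the wave and needs at least 0.3 ms between pulses. Both the model and that value are assumptions made for the sketch, and real neurons are far less regular.

```python
import math

def phase_locked_spikes(freq_hz, duration_s, min_interval_s, first_cycle=0):
    """Indices of the wave cycles on which one idealized neuron fires.

    The neuron always fires at the same phase of a cycle, but must skip
    cycles whenever the wave period is shorter than its minimum interval.
    """
    n_cycles = int(round(duration_s * freq_hz))
    # Number of cycles between two pulses (small epsilon guards float rounding).
    skip = max(1, math.ceil(min_interval_s * freq_hz - 1e-9))
    return list(range(first_cycle, n_cycles, skip))

def volley(freq_hz, duration_s, min_interval_s, n_neurons):
    """Pool the pulses of several neurons that start on successive cycles."""
    pooled = set()
    for k in range(n_neurons):
        pooled.update(phase_locked_spikes(freq_hz, duration_s, min_interval_s, first_cycle=k))
    return sorted(pooled)

MIN_INTERVAL_S = 0.3e-3     # assumed minimum interval between pulses

low_tone = phase_locked_spikes(2000, 0.005, MIN_INTERVAL_S)   # fires on every cycle
one_neuron = phase_locked_spikes(4000, 0.005, MIN_INTERVAL_S) # has to skip cycles
two_neurons = volley(4000, 0.005, MIN_INTERVAL_S, 2)          # together cover every cycle

print(low_tone)
print(one_neuron)
print(two_neurons)
```

At 2 kHz the single neuron keeps up with the wave. At 4 kHz it can only mark every other cycle, but two neurons firing in alternation mark all of them, which is the cooperation the volley principle calls for.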
